From gold mine to data mine: privacy concerns in the era of big data

ABSTRACT

The aim of this paper is to provide a general overview of Big Data analytics, focusing on the relationship between analytics and data protection in the light of the GDPR. The first part introduces Big Data, its main features and the way it works, and then examines the risks it may pose to data protection and privacy. Secondly, the paper considers how Big Data is treated under the new GDPR. Finally, it develops some general considerations on the topic.

***

Technological developments are quickly reshaping our reality, catapulting us into what Joseph Hellerstein, professor of Computer Science at the University of California, Berkeley, defined as the “Industrial Revolution of Data”[1]. The world we live in is made of an unimaginable amount of digital information[2], collected through computers, mobile phones, cameras, sensors and other high-tech devices. This condition gives us the opportunity to do things never thought possible before but, at the same time, it confronts us with a multitude of new issues, such as ensuring data security and privacy.

Scientists and engineers, considering that this revolution treats data as a new source of information, have coined a term for the phenomenon: “Big Data”. According to the definition given by the Gartner glossary, Big Data is “high-volume, high-velocity and/or high-variety information assets that demand cost-effective, innovative forms of information processing that enable enhanced insight, decision making, and process automation”[3]. In practice, Big Data is often described through three features, also known as the “3Vs”[4]: volume, velocity and variety[5].

Volume relates to the massive amount of information collected everywhere[6]. Velocity refers both to the speed at which data is collected and to the ability to process and analyse it almost simultaneously. Variety refers to the different forms (video, images, records, texts, etc.) and sources[7] of data.

This massive amount of data is an extraordinary source of information that can be used to make accurate decisions and predictions about reality. This is made possible by Artificial Intelligence (AI), which unlocks the value of Big Data. Indeed, AI has completely revolutionised the way data is analysed, abandoning the standard linear method in favour of an inductive approach[8] capable of extracting predictive information about individuals and social groups. This process involves a “discovery phase” undertaken to find unforeseen correlations among different datasets. Furthermore, it draws on the largest possible amount of information; in fact, the sampling model has been superseded by a system where “n=all”[9]. Because the analysis ranges over this unlimited set of data and relies on induction, the purposes of the research cannot be defined in advance, and it remains open to unexpected findings[10].

This revolutionary way of treating data can lead to significant benefits in both the private and the public sector[11]. There are obvious commercial benefits for companies, which use it to increase their profits and better assess consumer demand, but it also helps the public sector to offer more efficient services and so to improve people’s living conditions[12].

However, a significant part of Big Data operations is based on the processing of personal data[13], and this has raised concerns about the privacy and data protection rights of the individuals involved. This dark side of Big Data could not only affect personal privacy and security but also increase discrimination and injustice. Big Data tools offer new ways to profile, classify, exclude, squeeze out and discriminate against the people from whom data is collected. This is made possible by the complexity of the entire process, which is also characterised by a high level of opacity. In fact, where algorithms are used, individuals may not always be informed about the collection of their data, the way it is processed and how decisions are made about them[14].

In this regard, it is interesting to evaluate whether and how the General Data Protection Regulation (GDPR)[15], which becomes applicable on 25 May 2018, addresses this phenomenon and what effects it will have on it.

Firstly, it has to be pointed out that the GDPR will replace the current data protection legal framework based on the EU Data Protection Directive (95/46/EC)[16]. The Directive sought to harmonise the protection of fundamental rights and freedoms of individuals in respect of processing activities and to ensure the free flow of personal data between Member States. The GDPR, by contrast, tries to unify the implementation of data protection across the Union, in order to reduce the fragmentation created by the Directive and to maximise the economic potential of the digital economy.

Furthermore, in line with the Directive, the new Regulation establishes that the protection of natural persons in relation to the processing of personal data is a fundamental right. On the other hand, it introduces a new approach to data protection, which should be considered mostly as a value per se. The individual dimension of data protection is losing its supremacy, giving way also to its public dimension[17]. Thanks to that approach, the new Regulation has a dual nature which provides a high level of flexibility[18], also from a technological perspective. In that regard, the GDPR does not specifically address any particular technology, Big Data included.

This technological flexibility is in line with the principle of technological neutrality expressly affirmed by Recital (15) of the GDPR: “In order to prevent creating a serious risk of circumvention, the protection of natural persons should be technologically neutral and should not depend on the techniques used”[19]. In this way the new Regulation can keep up with the times.

However, even if the GDPR does not expressly consider or ban Big Data tools, they are tacitly taken into account by the new Regulation.

Indeed, Recital (71) and Article 22 affirm that “the data subject should have the right not to be subject to a decision, which may include a measure, evaluating personal aspects relating to him or her which is based solely on automated processing and which produces legal effects concerning him or her or similarly significantly affects him or her, such as automatic refusal of an online credit application or e-recruiting practices without any human intervention. Such processing includes ‘profiling’ that consists of any form of automated processing of personal data evaluating the personal aspects relating to a natural person, in particular to analyse or predict aspects concerning the data subject’s performance at work, economic situation, health, personal preferences or interests, reliability or behaviour, location or movements, where it produces legal effects concerning him or her or similarly significantly affects him or her”.

In this regard, profiling is defined by Article 4(4) as: “any form of automated processing of personal data consisting of the use of personal data to evaluate certain personal aspects relating to a natural person, in particular to analyse or predict aspects concerning that natural person’s performance at work, economic situation, health, personal preferences, interests, reliability, behaviour, location or movements”. Thus, it is a primary tool for extracting value from Big Data.

Additionally, the GDPR provides that a decision based solely on automated processing may be taken only when it is: 1) expressly authorised by Union or Member State law to which the controller is subject; 2) necessary for entering into, or performance of, a contract between the data subject and the controller; 3) based on the data subject’s explicit consent.

Moreover, the Regulation expressly emphasizes that where these exemptions apply, the processing must be fair and transparent and that: “in any case, such processing should be subject to suitable safeguards, which should include specific information to the data subject and the right to obtain human intervention, to express his or her point of view, to obtain an explanation of the decision reached after such assessment and to challenge the decision”.

Therefore, Article 22 takes Big Data analytics into account, but it is one of the most undefined and uncertain provisions of the Regulation: it leaves the full effect of the GDPR on Big Data analytics open to interpretation, an issue that needs to be resolved before and through the implementation of the GDPR. Certainly, it reveals all the concerns related to automated decisions and their negative effects on privacy.

These worries are confirmed by Article 35(3), which requires a data protection impact assessment whenever a business engages in a “systematic and extensive evaluation of personal aspects relating to natural persons” based on automated processing, including profiling. This provision effectively classifies such processing as “likely to result in a high risk to the rights and freedoms of natural persons”, in the words of Article 35.

In that regard, the privacy risks potentially caused by Big Data analytics include: 1) the processing of personal data beyond the original purpose for which it was gathered; 2) a lack of transparency, since the complexity of the methods involved, such as machine learning, can make it difficult for the data controller to explain how personal data is processed; 3) discrimination or bias against certain individuals resulting from the application of certain profiling algorithms[20].

In the light of the above, reconciling the data protection principles set out in the GDPR with the characteristics of Big Data analytics surely poses some challenges. However, these are not insurmountable, nor incongruous with the aims of the new Regulation.

In fact, some compliance tools can help organisations meet their data protection obligations and protect people’s privacy rights in such a context, for example the anonymisation of data.

Firstly, the anonymisation of collected data can be a successful tool that takes processing out of the data protection sphere and mitigates the risk of loss of personal data. According to Recital (26), “the principles of data protection should therefore not apply to anonymous information, namely information which does not relate to an identified or identifiable natural person or to personal data rendered anonymous in such a manner that the data subject is not or no longer identifiable”. In this way, the privacy concerns posed by Big Data analytics might be practically avoided.

However, given the massive amount of data gathered everywhere and the intrusive power of analytics, it is not possible to affirm with absolute certainty that anonymised data will always remain anonymous. In fact, when different datasets are cross-referenced, the risk of re-identification[21] becomes significant. So, considering the characteristics of Big Data, the issue is not eliminating the risk of re-identification but mitigating it until it is no longer significant.
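
The mechanism described above can be illustrated with a minimal sketch. Everything in it is hypothetical: the records, the names, the choice of quasi-identifiers (postcode, birth year, sex) and the generalisation rule are assumptions made for illustration only, not a method taken from the GDPR or from the sources cited here. It shows how an "anonymised" dataset can be linked to a second dataset that contains names, and how coarsening the shared attributes enlarges the smallest indistinguishable group (a measure often called k-anonymity), which is one possible way of mitigating the risk.

```python
from collections import Counter

# Hypothetical "anonymised" records: names removed, quasi-identifiers kept.
anonymised = [
    {"postcode": "20121", "birth_year": 1980, "sex": "F", "diagnosis": "asthma"},
    {"postcode": "20122", "birth_year": 1984, "sex": "F", "diagnosis": "diabetes"},
    {"postcode": "00184", "birth_year": 1975, "sex": "M", "diagnosis": "hypertension"},
    {"postcode": "00185", "birth_year": 1978, "sex": "M", "diagnosis": "migraine"},
]

# Hypothetical second dataset (e.g. a public register) that includes names.
register = [
    {"name": "Anna", "postcode": "20121", "birth_year": 1980, "sex": "F"},
    {"name": "Dina", "postcode": "20122", "birth_year": 1984, "sex": "F"},
    {"name": "Bruno", "postcode": "00184", "birth_year": 1975, "sex": "M"},
    {"name": "Elio", "postcode": "00185", "birth_year": 1978, "sex": "M"},
]

QUASI_IDENTIFIERS = ("postcode", "birth_year", "sex")


def key(record):
    """Combination of quasi-identifier values used to link the datasets."""
    return tuple(record[q] for q in QUASI_IDENTIFIERS)


def k_anonymity(records):
    """Size of the smallest group of records sharing the same key."""
    return min(Counter(key(r) for r in records).values())


def reidentify(anon_records, named_records):
    """Pairs (name, record) whose key is unique in both datasets."""
    anon_counts = Counter(key(r) for r in anon_records)
    named_counts = Counter(key(p) for p in named_records)
    names = {key(p): p["name"] for p in named_records}
    return [
        (names[key(r)], r)
        for r in anon_records
        if anon_counts[key(r)] == 1 and named_counts.get(key(r)) == 1
    ]


def generalise(record):
    """Coarsen the key: 3-digit postcode prefix and decade of birth."""
    coarse = dict(record)
    coarse["postcode"] = record["postcode"][:3]
    coarse["birth_year"] = record["birth_year"] // 10 * 10
    return coarse


linked = reidentify(anonymised, register)
coarse_anonymised = [generalise(r) for r in anonymised]
coarse_register = [generalise(p) for p in register]
linked_after = reidentify(coarse_anonymised, coarse_register)

print(k_anonymity(anonymised), len(linked))
print(k_anonymity(coarse_anonymised), len(linked_after))
```

In this toy example every record is unique before generalisation, so each one can be linked to a name; after generalisation each record shares its key with another one and no unique link remains. Generalisation reduces the precision of the data, so in practice it involves a trade-off with the usefulness of the analysis.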

Furthermore, many data controllers need to use data that is not anonymised, so what has been said above holds only if they do not need data which identifies individuals. Otherwise, their processing falls under the GDPR.

In that case, analytics and other automated processing that use personal data as an input face some challenges. In fact, considering also the deployment of Big Data under the EU’s Digital Single Market Strategy, it will not be a case of Big Data versus data protection: the two should coexist. So, it will be necessary to develop and implement other ways to reduce the risks and protect the personal data collected[22].

Finally, another dangerous consequence of the use of Big Data analytics has to be considered. In that regard, Big Data involves two different scenarios: an individual dimension, also known as the micro scenario, and a collective dimension, called the macro scenario.

According to this view, the first dimension concerns the way in which Big Data analytics affects individuals’ ability to make informed decisions about the use of their personal information and their expectations of privacy[23], whilst the second dimension focuses on the social impact of the use of Big Data analytics.

As mentioned above, profiling through Big Data analytics uses a multitude of different variables to infer predictive information, automatically generated by algorithms, about people and groups of people. In many cases, the data subjects are not aware of it or of the impact that this profiling activity has on their lives. The final result is that natural persons will often be subjected to decisions based on group behaviour rather than on their individual behaviour. This approach does not consider individuals per se, but as part of a group of people characterised by some common features. This practice is found not only in the commercial sector but also in fields such as security and public policy.
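
A minimal sketch may help to show what a decision based on group behaviour looks like in practice. The data, the function names, the threshold and the halving rule are all hypothetical assumptions chosen for illustration; the sketch does not reproduce any real scoring system. It assigns a credit limit to a customer by looking only at the repayment behaviour of other customers who shop at the same merchant, so the customer's own conduct plays no part in the outcome.

```python
# Hypothetical repayment history: (customer, merchant, repaid_on_time).
history = [
    ("c1", "shop_a", False),
    ("c2", "shop_a", False),
    ("c3", "shop_a", True),
    ("c4", "shop_b", True),
    ("c5", "shop_b", True),
]


def group_repayment_rate(merchant, records, exclude):
    """Share of on-time repayments among the OTHER customers of a merchant."""
    outcomes = [ok for cust, shop, ok in records if shop == merchant and cust != exclude]
    if not outcomes:
        return None  # no group information available
    return sum(outcomes) / len(outcomes)


def decide_limit(customer, merchant, current_limit, records=history, threshold=0.5):
    """Halve the limit when the merchant's other customers repay poorly.

    The decision never looks at the customer's own repayment record:
    it is driven entirely by the cluster the customer is placed in.
    """
    rate = group_repayment_rate(merchant, records, exclude=customer)
    if rate is not None and rate < threshold:
        return current_limit // 2
    return current_limit


# A new customer with no negative record of their own.
print(decide_limit("c9", "shop_a", 10_000))
print(decide_limit("c9", "shop_b", 10_000))
```

The same customer receives two different outcomes depending solely on where they shop, which is the feature of group-based profiling that the text describes.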

In the view of Professor Alessandro Mantelero[24]: “these groups are different from those considered in the literature on group privacy, in that they are created by data gatherers selecting specific clusters of information. Data gatherers shape the population they set out to investigate. They collect information about various people, who do not know the other members of the group and, in many cases, are not aware of the consequences of their belonging to a group”. So the privacy issue in this specific circumstance is different from the issues of both individual privacy and group privacy.

The importance of this new collective dimension stems from the fact that classification by modern algorithms focuses on clusters of people with common characteristics, because data collectors are mainly interested in studying the behaviour of groups rather than that of a single person.

The result of this phenomenon is forms of discrimination that data gatherers may practise on the basis of the predictions about clusters produced by analytics[25].

Additionally, the problem is particularly significant because some forms of discrimination are not necessarily against the law; in fact, “they are not based on individual profiles and only indirectly affect individuals as part of a category, without their direct identification. For this reason, existing legal provisions against individual discrimination might not be effective in preventing the negative outcomes of these practices, if adopted on a collective basis”[26].

In that regard, the GDPR does not seem to deal with the problem, and even the anonymisation of data might not be sufficient to avoid such a negative effect. In this circumstance, an ethical approach to the processing of personal data in a Big Data context could be useful. The European Data Protection Supervisor has also suggested that adherence to the law might not be enough and that it will be necessary to consider an ethical dimension of data processing as well[27].

In conclusion, we have recognised the benefits generated by the use of Big Data both for individuals and for society. We have also highlighted that these benefits will be effective only if privacy rights and data protection are embedded in the methods by which they are achieved. The concerns raised about privacy in the era of analytics are numerous and the answers are still undefined. Surely, the solution will involve a balance between the need to take advantage of the economic value of Big Data and the importance of ensuring privacy protection. The time is now ripe, and this result will require the cooperation of national competition authorities and the European Data Protection Board, as well as all the operators in the technological field.

BIBLIOGRAPHY

[1] J. Hellerstein, ‘The Commoditization of Massive Data Analysis’, Radar, 19 November 2008, http://radar.oreilly.com/2008/11/the-commoditization-of-massive.html. When the author wrote the paper he affirmed that “we’re not quite there yet, since most of the digital information available today is still individually “handmade”: prose on web pages, data entered into forms, videos and music edited and uploaded to servers. But we are starting to see the rise of automatic data generation “factories” such as software logs, UPC scanners, RFID, GPS transceivers, video and audio feeds”. So now, 10 years later, we are in the middle of this revolution.

[2] Just to give an idea of what we are talking about: when Max Schrems decided to find out how much data Facebook had collected about him over a period of three years, exercising his “right of access” against the US company, he received a file that was over 1,200 pages long. See K. Hill, ‘Max Schrems: The Austrian Thorn In Facebook’s Side’, Forbes, 7 February 2012, https://www.forbes.com/sites/kashmirhill/2012/02/07/the-austrian-thorn-in-facebooks-side/#7ab5e6547b0b.

[3] See Gartner IT Glossary at https://www.gartner.com/it-glossary/big-data.

[4] However, some authors, starting from the traditional definition, also consider a “4th V”: veracity, which is directly related to the reliability of data. In truth, veracity should be thought of as a concept linked to accountability: people who take decisions based on Big Data analytics should know whether they can trust what the data is telling them. Seen this way, veracity should not be considered an attribute of Big Data but something to aim for.

[5] In the opinion of Sean Jackson this description has lost its meaning because of its overuse. See S. Jackson, ‘Big data in big numbers – it’s time to forget the ‘three Vs’ and look at real-world figures’, Computing, 18 February 2016.

[6] It is estimated that each day about 2.5 quintillion bytes of new data are created worldwide. See http://www.ibmbigdatahub.com/sites/default/files/infographic_file/4-Vs-of-big-data.jpg. See also K. Cukier, ‘Data, Data Everywhere. A special report on managing information’, The Economist, 27 February 2010, https://www.emc.com/collateral/analyst-reports/ar-the-economist-data-data-everywhere.pdf.

[7] Data can come from three main sources: 1) communications between people; 2) interactions between people and things; 3) interconnections between things. This last phenomenon is described by the term “Internet of Things” (IoT), which is the network of physical objects that contain embedded technology to communicate and sense or interact with their internal states or the external environment.

[8] A. Mantelero and G. Vaciago, ‘The “Dark Side” of Big Data: Private and Public Interaction in Social Surveillance’, Computer Law Review International, 14 (6), 2013, p. 161.

[9] V. Mayer-Schönberger and K. Cukier, Big Data: A Revolution That Will Transform How We Live, Work and Think, John Murray, 2013.

[10] AI is underpinned and facilitated by different technical mechanisms: machine learning, hashing, crowdsourcing, etc. Big Data analysis was born from the union of Big Data, AI and these technical mechanisms. For a technical approach, see G. D’Acquisto and M. Naldi, Big Data e Privacy by Design, Torino, Giappichelli Editore, 2017.

[11] The European Commission has pointed out that the value of the EU data economy was more than €285 billion in 2015 and it might grow up to €739 billion by 2020. See the European Commission communication, ‘Building a European data economy’, 10 January 2017, https://ec.europa.eu/digital-single-market/en/policies/building-european-data-economy.

[12] For example, Big Data is used to improve the quality of public transport in London by collecting large amounts of data through ticketing systems, surveys, social networks and more. In that way, bus routes were adapted and restructured according to travellers’ needs, and bus stations were expanded to increase their capacity. See L. Alton, ‘Improved Public Transport for London, Thanks to Big Data and the Internet of Things’, London Datastore, 9 June 2015, https://data.london.gov.uk/blog/improved-public-transport-for-london-thanks-to-big-data-and-the-internet-of-things/. L. Weinstein, ‘How TfL uses ‘big data’ to plan transport services’, Eurotransport, 20 June 2016, https://www.intelligenttransport.com/transport-articles/19635/tfl-big-data-transport-services/.

[13] In truth, it is also important to point out that some Big Data processing operations do not involve personal data, for example the world climate and weather data used to predict climate change. See Article 29 Working Party, ‘Statement on Statement of the WP29 on the impact of the development of big data on the protection of individuals regarding the processing of their personal data in the EU’, 14/EN WP 221, 16 September 2014.

[14] For a general overview of the harm that has been caused by uses of big data see J. Redden and J. Brand, Data Harm Record, Data Justice Lab, 07 December 2017.

[15] Regulation (EU) 2016/679 of the European Parliament and of the Council, 27 April 2016, Official Journal L 119, 4.5.2016, p. 1–88.

[16] Directive 95/46/EC of the European Parliament and of the Council, 24 October 1995, Official Journal L 281, 23/11/1995 P. 0031 – 0050.

[17] This consideration is confirmed by a joint reading of Articles 33 and 34 of Regulation (EU) 2016/679. According to their content, “the controller shall without undue delay and, where feasible, not later than 72 hours after having become aware of it, notify the personal data breach to the supervisory authority competent” and only “when the personal data breach is likely to result in a high risk to the rights and freedoms of natural persons, the controller shall communicate the personal data breach to the data subject without undue delay”. Since notification of a breach is always due to the competent national authority, and only in certain cases also to the data subject, this reading confirms the major role played by the public dimension of data protection.

[18] This circumstance is also shown by Chapter IV of the Regulation, which introduces the central role assigned to the concept of “privacy by design”. According to Article 25(1): “Taking into account the state of the art, the cost of implementation and the nature, scope, context and purposes of processing as well as the risks of varying likelihood and severity for rights and freedoms of natural persons posed by the processing, the controller shall, both at the time of the determination of the means for processing and at the time of the processing itself, implement appropriate technical and organisational measures, such as pseudonymisation, which are designed to implement data-protection principles, such as data minimisation, in an effective manner and to integrate the necessary safeguards into the processing in order to meet the requirements of this Regulation and protect the rights of data subjects”.

[19] G. Buttarelli, ‘I big data, la sicurezza e la privacy. La sfida europea del GDPR’, Formiche, 26 December 2017, http://formiche.net/2017/12/26/big-data-la-sicurezza-la-privacy-la-sfida-europea-del-gdpr/.

[20] Oana Dolea, ‘GDPR and Big Data – Friends or Foes?’, Lexology, 03 August 2017, https://www.lexology.com/library/detail.aspx?g=a1f04474-5bbe-4d96-9c90-f03a2dd9b5a8.

[21] It is pointed out, by some commentators, that it is possible to identify individuals in anonymised datasets and, for this reason, anonymisation is becoming increasingly ineffective. See President’s Council of Advisors on Science and Technology, ‘Big data and privacy. A technological perspective. White House’, May 2014 http://www.whitehouse.gov/sites/default/files/microsites/ostp/PCAST/pcast_big_data_and_privacy_-may_2014.pdf.

[22] The GDPR introduces some compliance tools, such as the privacy notice, the privacy impact assessment, the above-mentioned privacy by design and pseudonymisation.

[23] This dimension was mostly introduced in the first part of the paper.

[24] A. Mantelero, ‘Personal data for decisional purposes in the age of analytics: From an individual to a collective dimension of data protection’, Computer Law & Security Review, 2016, pp. 238-255.

[25] For example, American Express used purchase history to adjust credit limits based on where customers had shopped. The case concerns a man who found his credit limit reduced from $10,800 to $3,800 because American Express, through a profiling activity, had treated him as part of a group: “other customers who had used their card at establishments where [he] recently shopped have a poor repayment history with American Express”. See M. Hurley and J. Adebayo, ‘Credit scoring in the era of big data’, Yale Journal of Law and Technology, 2016, p. 151. However, there are some forms of discrimination, based on race, religion, sexual preferences or status, which affect individuals in an even more intrusive way.

[26] See A. Mantelero, ‘Personal data for decisional purposes in the age of analytics: From an individual to a collective dimension of data protection’, Computer Law & Security Review, 2016, pp. 238-255.

[27] European Data Protection Supervisor, ‘Towards a new digital ethics’, Opinion 4/2015, EDPS, September 2015, https://secure.edps.europa.eu/EDPSWEB/webdav/site/mySite/shared/Documents/Consultation/Opinions/2015/15-09-11_Data_Ethics_EN.pdf.

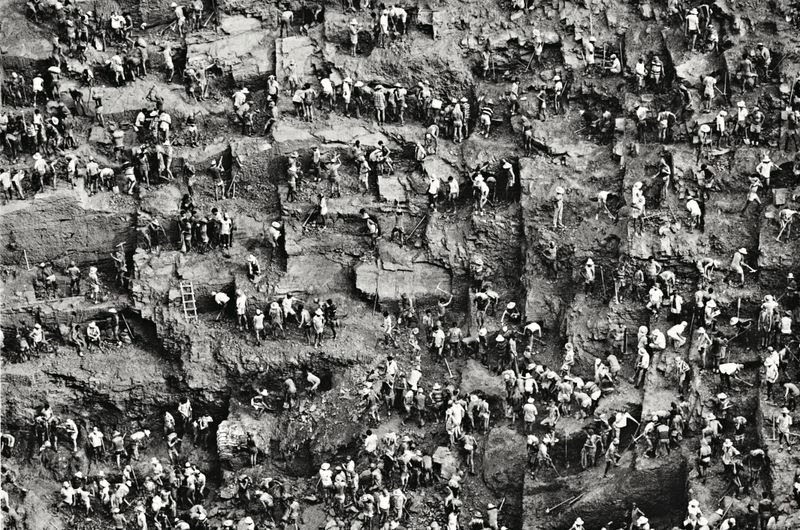

Photo: Sebastião Salgado, Serra Pelada

Salvis Juribus – Rivista di informazione giuridica


Copyrights © 2015 - ISSN 2464-9775


Marina Pecoraro

Published 6 April 2018